source: thewishwall.org

We discussed persistent collectives at the MPI Forum last week. It was a great meeting and the discussions were very insightful. I really like persistent collectives and believe that MPI implementors should support them!

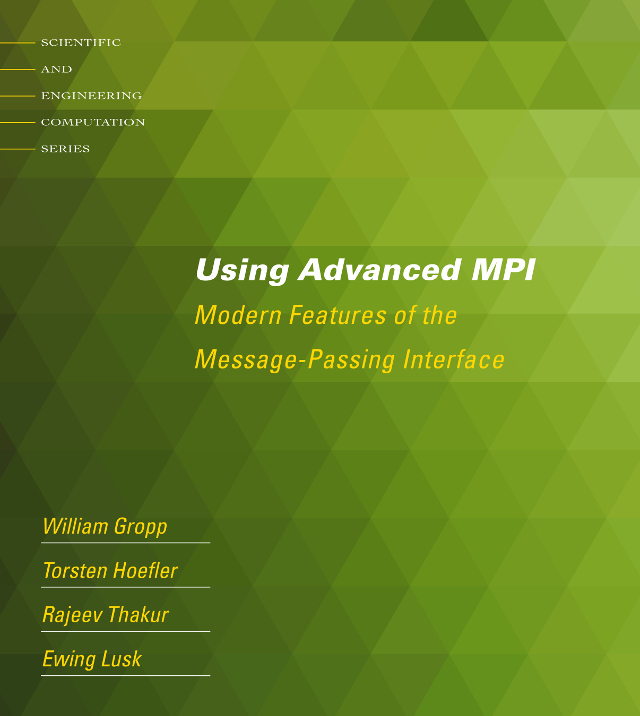

In that context, I wanted to note that implementors can do this easily and elegantly in MPI-3 without any changes to the standard. We already used this technique in 2012 in the paper “Optimization Principles for Collective Neighborhood Communications”. But let me recap the idea here.

The key ingredients are communicators (MPI’s name for immutable process groups) and Info objects. Info objects are a mechanism for users to tell the library how they will use MPI. They are very similar to pragmas in C/C++. Some info strings are defined by the standard itself, but MPI libraries may add arbitrary strings of their own.

So one way to specify a persistent collective is to duplicate the communicator under a new name, e.g., my_persistent_comm. On this communicator, the user can set an info object that makes specific operations persistent, e.g., mympi_bcast_is_persistent. The MPI library is encouraged to choose a prefix specific to itself (in this case “mympi”).

The library can now set a flag on the communicator and check it at each broadcast call to see whether the call is persistent. By passing this info object, the user guarantees that the function arguments passed to the specific call (e.g., bcast) on this communicator will always be the same. Thus, the MPI library can specialize the call to its arguments (i.e., implement all optimizations possible for persistence) once it has seen the first invocation of MPI_Ibcast().
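To make the library side concrete, here is a small toy model in plain C. It is not taken from any MPI implementation: model_comm, build_plan, and model_ibcast are hypothetical names, and "building a plan" just records the arguments and bumps a counter. The point is only the control flow: check the flag, specialize on the first call, reuse afterwards.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for a library-internal communicator object. */
typedef struct {
    bool   bcast_is_persistent; /* set when the info key was seen */
    bool   plan_ready;          /* true once the first broadcast was seen */
    void  *buf;                 /* arguments recorded by the latest setup */
    size_t bytes;
    int    root;
    int    plans_built;         /* counts expensive setups, for illustration */
} model_comm;

/* Stand-in for the expensive, argument-specific setup. */
static void build_plan(model_comm *c, void *buf, size_t bytes, int root)
{
    c->buf = buf;
    c->bytes = bytes;
    c->root = root;
    c->plans_built++;
}

/* Returns true if the call was served from the cached plan. */
bool model_ibcast(model_comm *c, void *buf, size_t bytes, int root)
{
    assert(c != NULL);

    if (!c->bcast_is_persistent) {
        /* Ordinary path: nothing may be assumed, so set up every time. */
        build_plan(c, buf, bytes, root);
        return false;
    }
    if (!c->plan_ready) {
        /* First invocation on a persistent communicator: specialize once. */
        build_plan(c, buf, bytes, root);
        c->plan_ready = true;
        return false;
    }
    /* The user promised identical arguments; a debug build can check that. */
    assert(buf == c->buf && bytes == c->bytes && root == c->root);
    return true;
}
```

With the flag set, two identical calls build one plan and the second call reuses it; without the flag, every call pays for the setup again.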

This interface is very flexible. One could even imagine various levels of persistence, as defined in our 2012 paper: (1) persistent topology (this is implicit in normal and neighborhood collectives), (2) persistent message sizes, and (3) persistent buffer (sizes and addresses). We describe optimizations for each level in the paper. These levels should be considered in any MPI specification effort.
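One way a library could expose these levels through an info value is sketched below in plain C. The value strings "topology", "sizes", and "buffers" and all function names are assumptions made up for this example; the paper and the standard do not prescribe them. The sketch only encodes which call arguments the library may treat as fixed at each level.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* The three levels named in the text, from weakest to strongest guarantee. */
typedef enum {
    PERSIST_TOPOLOGY = 1, /* who communicates with whom */
    PERSIST_SIZES    = 2, /* additionally, message sizes */
    PERSIST_BUFFERS  = 3  /* additionally, buffer addresses */
} persist_level;

/* Map a (hypothetical) info value to a level; returns 0 for unknown
 * strings so that unrecognized hints can be ignored. */
int parse_persist_level(const char *value)
{
    assert(value != NULL);
    if (strcmp(value, "topology") == 0) return PERSIST_TOPOLOGY;
    if (strcmp(value, "sizes") == 0)    return PERSIST_SIZES;
    if (strcmp(value, "buffers") == 0)  return PERSIST_BUFFERS;
    return 0;
}

/* May the library precompute anything that depends on message sizes? */
bool sizes_are_fixed(persist_level level)
{
    return level >= PERSIST_SIZES;
}

/* May the library precompute anything that depends on buffer addresses? */
bool buffers_are_fixed(persist_level level)
{
    return level >= PERSIST_BUFFERS;
}
```

A library would consult these predicates when deciding how much of a call it can specialize after the first invocation.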

I agree that having some official support for persistence in the standard would be great, but these levels and info arguments should at least be discussed as an alternative. It seems that large parts of the MPI Forum are not aware of this idea (this is part of why I am writing this post 😉).

Furthermore, I am mildly concerned about feature inflation in MPI. Adding more and more features that are not optimized because they are not used, because they have not been optimized, because they were not used … may not be the best strategy. Today’s MPIs are not great at asynchronous progression of nonblocking collectives, and the performance of neighborhood collectives and MPI-3 RMA is mostly unconvincing. Maybe the community needs some time to optimize and use those features. At the 25 years of MPI symposium, it became clear that large parts of the community share a similar concern.

Keep the great discussions up!