Twelve ways to fool the masses when reporting performance of deep learning workloads

Torsten Hoefler

Due to its widespread success in many hard machine learning tasks, deep learning quickly became one of the most important and demanding compute workloads today. In fact, much of the success of deep learning stems from the high compute performance of today’s devices (and the massive amounts of data available). Despite the high compute capabilities, important tasks can take weeks to train in practical settings. When it comes to improving the performance of deep learning workloads, the HPC community plays an important role: high-performance accelerators as well as high-performance networks that enable the necessary massively parallel computation have both been developed and pioneered in the context of high-performance computing. The similarity between deep learning workloads and more traditional dense linear algebra is striking: both are expressible as tensor contractions (modulo some simple nonlinearities).

It thus seems natural that the HPC community embarks on the endeavour to solve larger and larger learning problems in industrial and scientific contexts. We are just at the beginning of potential discoveries to be made by training larger and more complex networks to perform tasks at super-human capabilities. One of the most important aspects in HPC is, as the middle name suggests, performance. Thus, many of the conferences, competitions, and science results focus on the performance aspects of a computation. Today, most of the performance improvement stems from growing parallelism: wider vectorization, multi-threading, many-core designs in the form of accelerators with massively parallel units, or large-scale parallelism at the cluster level. Accurately reporting and arguing about the performance of today’s complex systems is a daunting task and requires scientific rigor, as explained in our earlier paper “Scientific Benchmarking for Parallel Computing Systems”.

Yet, in the machine learning community, the spotlight is on the capability of a model to perform useful predictions, and performance is mainly a catalyst. Learning workloads are usually of a statistical nature and thus relatively resistant to perturbations in the data and the computation. Thus, in general, one can trade off accuracy against performance. It’s trivially clear that one can train a model faster when using less data; however, the quality suffers. Many other, more intricate, aspects of training can be accelerated by introducing approximations, for example, to enable higher scalability. Many of these aspects are new to HPC and somewhat specific to (deep) learning, and the HPC community may lack the experience to assess performance results in this area.

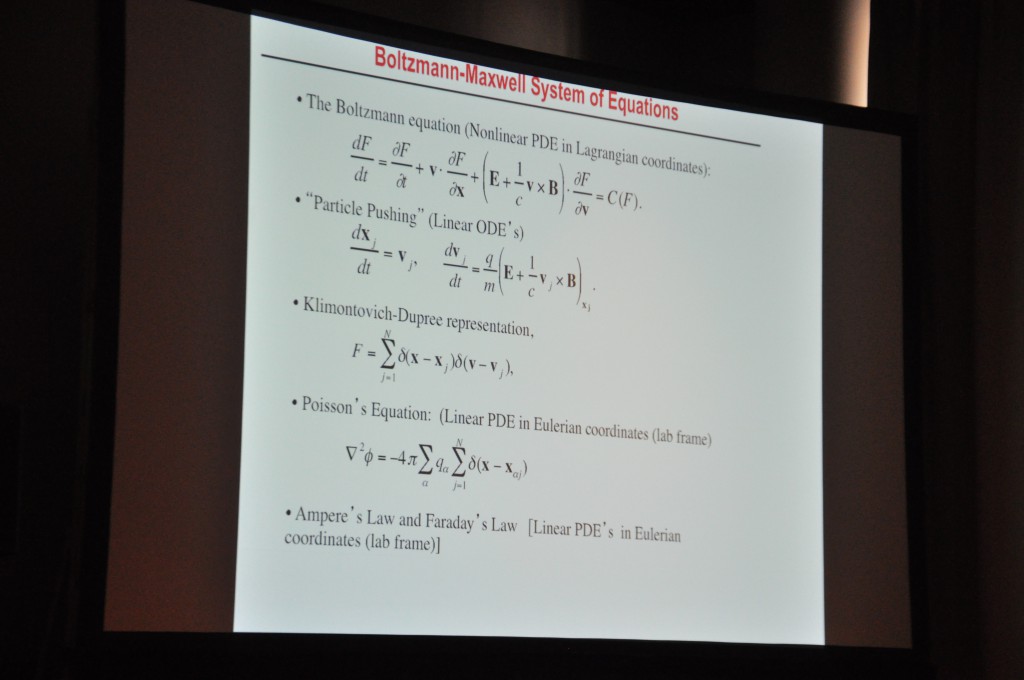

I collected these thoughts over the last two years and was motivated to finalize them during the IPAM workshop “HPC for Computationally and Data-Intensive Problems” organized by Joachim Buhmann, Jennifer Chayes, Vipin Kumar, Yann LeCun, and Tandy Warnow. Thanks for the great discussions during the workshop (and sorry the discussion after that last evening talk took much longer than planned). I updated this post with thoughts brought up during the discussion and thank all participants!

Here, we report in a humorous way on some ways to “improve” one’s performance results (“floptimization”) when reporting performance of deep learning workloads. Any similarity with existing papers or competitions is of course purely by chance :-)!

1) Ignore accuracy when scaling up!

Our first guideline for reporting the highest performance is seemingly one of the most common ones. Scaling deep learning is very tricky because the best performing optimizer, stochastic gradient descent (SGD), is mostly sequential. Data parallelism can be achieved by processing the elements of a minibatch in parallel. However, the best size of the minibatch is determined by the statistical properties of the process and is thus limited. Yet, when one ignores the quality (or convergence in general), data-parallel SGD will scale wonderfully to any size system out there! Weak scaling by adding more data can benefit this further; after all, we can process all that data in parallel. In practice, unfortunately, test accuracy matters, not how much data one processed.

One way around this may be to only report time for a small number of iterations because, at large scale, it’s too expensive to run to convergence, right?

2) Do not report test accuracy!

The SGD optimization method fits the function that the network represents to the dataset used for learning. This minimizes the so-called training error. However, it is not clear whether the training error is a useful metric. After all, the network could just memorize all examples without any capability to work on unseen examples. This is a classic case of overfitting. Thus, real-world users typically report test accuracy on an unseen dataset because machine learning is not optimization!

Yet, when scaling deep learning computations, one must tune many so-called hyperparameters (batch size, learning rate, momentum, …) to enable convergence of the model. It may not be clear whether the best setting of those parameters benefits the test accuracy as well. In fact, there is evidence that careful tuning of hyperparameters may decrease the test accuracy by overfitting to a specific problem.

3) Do not report all training runs needed to tune hyperparameters!

Of course, hyperparameters heavily depend on the dataset and the network used for training. Thus, optimizing the parameters for a specific task will enable you to achieve the highest performance. It’s not clear whether these parameter values are good for training any other model/data or if the parameters themselves are overfitted to the problem instance :-). Thus, after consuming millions of compute hours to tune specific hyperparameters, one simply reports the numbers of the fastest run!
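Now seriously: an honest report would state the cost of the whole sweep next to the fastest run. The following is a minimal sketch of such an accounting; the `Run` record, the `summarize_sweep` helper, and all numbers are hypothetical and only serve as illustration.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One training run from a hypothetical hyperparameter sweep."""
    learning_rate: float
    node_hours: float      # compute consumed by this run
    test_accuracy: float   # accuracy on held-out data


def summarize_sweep(runs):
    """Return the fastest run's numbers together with the cost of all runs."""
    fastest = min(runs, key=lambda r: r.node_hours)
    total = sum(r.node_hours for r in runs)
    return {
        "fastest_node_hours": fastest.node_hours,
        "fastest_test_accuracy": fastest.test_accuracy,
        "total_node_hours": total,
        "num_runs": len(runs),
    }


# Made-up numbers, for illustration only.
sweep = [
    Run(learning_rate=0.1, node_hours=120.0, test_accuracy=0.71),
    Run(learning_rate=0.2, node_hours=90.0, test_accuracy=0.74),
    Run(learning_rate=0.4, node_hours=60.0, test_accuracy=0.75),
    Run(learning_rate=0.8, node_hours=200.0, test_accuracy=0.52),
]
summary = summarize_sweep(sweep)
print(summary)
```

In this toy sweep, the fastest run accounts for 60 of the 470 node hours that were actually spent, which is the number a floptimizer would conveniently leave out.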

4) Compare outdated hardware with special-purpose hardware!

A classic one, but very popular in deep learning: make sure to compare some old single-core CPU to your new GPU-tuned algorithm. Oh, and if you have specialized hardware then make sure to never compare to the latest available GPU but pick one from some years back. After all, that’s when you started developing, right?

5) Show only kernels/subsets when scaling!

Another classic that seems to be very popular. For example, run the operations (processing layers, communicating, updating gradients) in isolation and only report scaling numbers of those. This elegantly avoids questions about the test accuracy, after all, one just worries about a part of the calculation, no?

6) Do not consider I/O!

The third classic — deep learning often requires large amounts of data. Of course, when training on a large distributed system, only the computation matters, no? So loading all that data can safely be ignored :-).

7) Report highest ops numbers (whatever that means)!

Exaops sounds sexy, doesn’t it? So make sure to reduce the precision until you reach them. But what if I tell you that my laptop performs exaops/s if we consider the 3e9 transistors switching a binary digit each at 2.4e9 Hz? I have an exaops (learning) appliance and I’ll sell it for $10k! Basically the whole deal about low-precision “exaops” is a marketing stunt and should be (dis)regarded as such – flops have always been 64 bits and lowering the precision is not getting closer to the original target of exascale (or any other target). What’s even better is to mention “mixed precision” but never talk about what fraction of the workload was performed at what precision :-).

This is especially deceiving when talking about low precision flop/s – a nice high rate of course but we won’t talk about how many more of those operations are needed to achieve convergence as long as we have a “sustained” xyz-flop/s. It’s application progress, isn’t it?
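The laptop arithmetic from above is easy to check. The short sketch below simply multiplies the two numbers quoted in the text (3e9 transistors, 2.4e9 Hz) and assumes, as the joke does, that every transistor flips one binary digit per clock cycle; the variable names are made up for this example.

```python
# Numbers taken from the text above.
transistors = 3e9   # transistors in the laptop CPU
clock_hz = 2.4e9    # clock frequency in Hz

# Assumption of the joke: each transistor switches one bit per cycle.
bit_ops_per_second = transistors * clock_hz

EXA = 1e18
exa_bit_ops = bit_ops_per_second / EXA
print(f"{exa_bit_ops:.1f} 'exaops' per second at 1-bit precision")
```

The result is roughly 7.2 “exaops” per second, which illustrates why an operation count without a stated precision says very little.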

8) Show performance when enabling option set A and show accuracy when enabling option set B!

From the discussion above, it’s obvious that readers may expect you to report both accuracy and performance. One way to report the highest performance is now to report performance for the fastest configuration and accuracy for the most accurate one.

One may think that this is an obvious no-no but I was surprised how many examples there are.

9) Train on unreasonably large inputs!

This is my true favorite, the pinnacle of floptimization! It took me a while to recognize and it’s quite powerful. The image classification community is used to scaling down high-resolution images to ease training. After all, scaling to 244×244 pixels retains most of the features and saves a quadratic factor (in the image width/height) of computation time. However, such small images are rather annoying when scaling up because they require too little compute. Especially for small minibatch sizes, scaling is limited because processing a single small picture on each node is very inefficient. Thus, if flop/s are important then one shall process large, e.g., “high-resolution”, images. Each node can easily process a single example now and the 1,000x increase in needed compute comes nicely to support scaling and overall flop/s counts! A win-win unless you really care about the science done per cost or time.

In general, when processing very large inputs, there should be a good argument why: one teraflop of compute per example may be excessive.
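The quadratic factor can be made concrete with a back-of-the-envelope operation count. The sketch below assumes a single stride-1, same-padded 2D convolution whose cost is proportional to the number of output pixels; `conv_layer_flops` and the layer shape (3 input channels, 64 output channels, 3×3 kernel) are hypothetical choices for illustration, not taken from any particular network.

```python
def conv_layer_flops(height, width, in_channels, out_channels, kernel_size):
    """Flop count (2 per multiply-add) of a stride-1, same-padded 2D convolution."""
    return 2 * height * width * in_channels * out_channels * kernel_size ** 2


# Same hypothetical layer applied to a small and to a 10x wider/taller image.
small = conv_layer_flops(244, 244, in_channels=3, out_channels=64, kernel_size=3)
large = conv_layer_flops(2440, 2440, in_channels=3, out_channels=64, kernel_size=3)

ratio = large / small
print(f"10x larger side length -> {ratio:.0f}x more flops per example")
```

Under this assumption, a 10x larger side length costs 100x more flops per example, so a 1,000x increase corresponds to images that are only about 32x wider and taller.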

10) Run training just for the right time!

When showing scalability with processors make sure to show training for a fixed wall-time. So you can cram twice as many flop/s on twice as many processors. Who cares about application/convergence speedup after all as long as we have flop/s? If your convergence plots behave oddly (e.g., diverge after some time), just cut them off at random points.

If this is all too complex, then just separate speedup plots from convergence plots. Show convergence plots for the processor counts where they look best and scalability plots for, of course, much larger numbers of processes! There are also many tricks when plotting the number of epochs with varying batch size and varying numbers of processes (when the batch size changes, so does the number of iterations).

In general, now seriously, convergence speed should always be bound to the number of operations (i.e., epochs or number of examples processed).
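One simple way to follow this advice is to convert the iteration count on the x-axis of a convergence plot into examples (or epochs) processed. The helpers below are a minimal sketch with hypothetical names and made-up dataset and batch sizes.

```python
def examples_processed(iteration, global_batch_size):
    """Number of training examples consumed after `iteration` SGD steps."""
    return iteration * global_batch_size


def epochs_processed(iteration, global_batch_size, dataset_size):
    """Same quantity expressed in passes over the dataset."""
    return examples_processed(iteration, global_batch_size) / dataset_size


# Made-up setup: two runs that look identical on an "iterations" axis.
dataset_size = 1_280_000
small_batch_epochs = epochs_processed(5_000, 256, dataset_size)
large_batch_epochs = epochs_processed(5_000, 8_192, dataset_size)

print(small_batch_epochs)  # epochs done by the small-batch run
print(large_batch_epochs)  # epochs done by the large-batch run
```

After the same 5,000 iterations, the small-batch run has seen the dataset once while the large-batch run has processed it 32 times, so comparing the two per iteration hides a 32x difference in work.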

11) Minibatch sizing for fun and profit – weak vs. strong scaling.

We all know about weak vs. strong scaling, i.e., the simpler case when the input size scales with the number of processes and the harder case when the input size is constant. In the end, deep learning is all strong scaling because the model size is fixed and the total number of examples is fixed. However, one can cleverly utilize the minibatch sizes. Here, weak scaling keeps the minibatch size per process constant, which essentially grows the global minibatch size. Yet, the total epoch size remains constant, which causes fewer iterations per epoch and thus fewer overall communication rounds. Strong scaling keeps the global minibatch size constant. Both have VERY different effects on convergence: weak scaling eventually worsens convergence because it reduces stochasticity, and strong scaling does not.

In seriousness, however, microbatching that doesn’t change the statistical convergence properties is always fine.
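The bookkeeping behind the two scaling modes can be sketched in a few lines. The function names and all sizes below are hypothetical; the sketch only computes batch sizes and iterations per epoch and says nothing about the resulting convergence.

```python
import math


def weak_scaling(per_process_batch, num_processes, dataset_size):
    """Per-process minibatch stays fixed, so the global minibatch grows."""
    global_batch = per_process_batch * num_processes
    return {
        "per_process_batch": per_process_batch,
        "global_batch": global_batch,
        "iterations_per_epoch": math.ceil(dataset_size / global_batch),
    }


def strong_scaling(global_batch, num_processes, dataset_size):
    """Global minibatch stays fixed, so the per-process share shrinks."""
    return {
        "per_process_batch": global_batch / num_processes,
        "global_batch": global_batch,
        "iterations_per_epoch": math.ceil(dataset_size / global_batch),
    }


# Made-up sizes, for illustration only.
dataset_size = 1_024_000
weak_1 = weak_scaling(32, 1, dataset_size)
weak_64 = weak_scaling(32, 64, dataset_size)
strong_64 = strong_scaling(256, 64, dataset_size)

for name, plan in [("weak, 1", weak_1), ("weak, 64", weak_64), ("strong, 64", strong_64)]:
    print(name, plan)
```

With these numbers, weak scaling from 1 to 64 processes grows the global minibatch from 32 to 2,048 and cuts the iterations per epoch from 32,000 to 500, whereas strong scaling with a global minibatch of 256 leaves each of the 64 processes with only 4 examples per step.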

12) Select carefully how to compare to the state of the art!

Last but not least, another obvious case: very often, deep learning is used as a replacement for an existing technique. If this is the case, you should only compare accuracy *or* performance. Especially if it’s unlikely that your model is good in both ;-).

Here are the slides presented at the IPAM workshop.