May 23, 2014

Andrea presents our paper on how to achieve bitwise reproducibility for floating point arithmetic on any architecture for cheap (nearly free). See more details in the paper.
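For readers wondering why bitwise reproducibility is hard in the first place, here is a minimal Python sketch of the underlying problem. It is not the method from the paper: it only shows that floating-point addition is not associative, and it uses the standard library's `math.fsum` (a correctly rounded sum) as one well-known way to get an order-independent result. The helper `naive_sum` and the test data are made up for illustration.

```python
import math
import random


def naive_sum(xs):
    """Plain left-to-right accumulation, like a simple loop in C."""
    total = 0.0
    for x in xs:
        total += x
    return total


# Floating-point addition is not associative: the grouping changes the result.
left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)
print(left == right)  # False

# Illustrative data with widely varying magnitudes (an assumption, not from the paper).
random.seed(0)
values = [random.uniform(-1.0, 1.0) * 10.0 ** random.randint(-8, 8)
          for _ in range(10_000)]
shuffled = values[:]
random.shuffle(shuffled)

# The naive sums may differ in their last bits depending on the order ...
print(naive_sum(values), naive_sum(shuffled))

# ... while a correctly rounded sum is identical for every order.
exact_a = math.fsum(values)
exact_b = math.fsum(shuffled)
print(exact_a == exact_b)  # True
```

In a parallel reduction the summation order depends on the number of processes and the reduction tree, which is exactly where this effect shows up in practice.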

May 10, 2014

So we did it again: we celebrated Greg’s, Maciej’s, and Bogdan’s recent successes with a barbecue. But we wouldn’t be SPCL if it were just any barbecue … of course it was at the top of a mountain, this time the “Grosse Mythen”.

Remember v1.0 to Mount Rigi? Switzerland is absolutely awesome! Mountains, everything is green, ETH :-).

Here are some impressions:

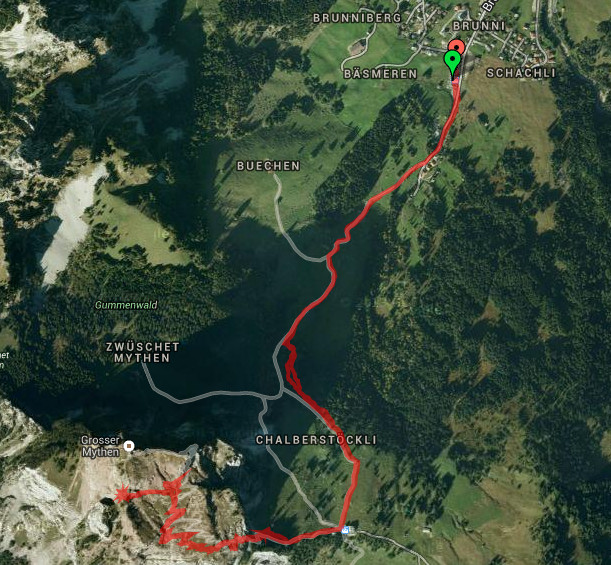

The complete tour was 6.9 km, with a total walking time of 3.35 hours and an altitude difference of only 750m (from 1149m to 1899m). Much shorter and less altitude than our last trip. But not less fun! And yes, my GPS was a bit off :-/.

So this is how it looked from the start — I have to say pretty impressive. But it turned out to be much much simpler than it seems, and MUCH simpler than Rigi was :-).

Do you see the flag on top (yeah, it’s the red pixel on the right side in this resolution)? That’s where we’ll hike!

Our first real view towards Brunni.

The path is actually at the beginning a bit more stressful than later but overall simple.

Lots of planes around Mythen, some seem rather historic, like this one.

First stage done — arrived at Holzegg and view down to Brunni (yeah, we could have taken the cable car but are we men or what?). SPCLers walk up mountains!

Now the steep part begins. It’s actually a bit dangerous: there are many places where you can fall a couple of hundred meters :-).

Mac still looks too good … we should have taken more water and food :-). Remember Rigi?

The path goes basically vertically (in serpentines) up a rock wall. Nice! You should not be afraid of heights …

… because you will constantly see beautiful things like this …

… or horror abysses right at the trail like this :-).

But there were helpers: these nice chains probably saved our lives more than once.

Mac acquired a second backpack and some stones on the way … and he’s still looking too good!

A nice bench … again, for people not afraid of heights.

The neighboring “Kleiner Mythen” is apparently much harder to climb. And it’s smaller, so why would we climb it anyway!? 😉

The path – awesome! Walking a nice and thin ridge.

And again, some nice opportunities to fly, aehem fall.

Still snow around the top in May.

The top – we made it! Most importantly: the Swiss flag :-).

The last ascent to the very top … still looking too fresh!

We all made it to the top alive (and later back down).

Relaxing with a beer … tststs.

Meanwhile food preparations start.

The grill didn’t start that well … we should have sent smoke signals down to the valley ;-).

Some folks took shifts in blowing.

We brought some nice food … to the nice view!

To avoid fights with the locals!

Finally the grill started … took 30 mins or so ;-).

Beautiful views, did I mention that it was rather high?

But most beautiful views — Rigi was just left of this.

And of course, if you travel with a Polak, you’ll get some top-vodka.

The car was waiting patiently in Brunni.

Many opportunities for free falls :-).

I drove, and we made some contact with cows :-).

Others took the bus back home.

After all, *awesome* and very efficient, the whole tour took 7.5 hours :-). So we set some new standards for the SPCL hike v 3.0!

Feb 1, 2014

I wanted to highlight the ExaMPI’13 workshop at SC13. It was a while ago but it is worth reporting!

The workshop’s theme was “Exascale MPI” and the workshop addressed several topics on how to move MPI to the next big divisible-by-10^3 floating point number. Actually, for Exascale, it’s unclear if it’s only FLOPs, maybe it’s data now, but then, we easily have machines with Exabytes :-). Anyway, MPI is a viable candidate to run on future large-scale machines, maybe at a low level.

A while ago, some colleagues and I summarized the issues that MPI faces in going to large scale: “MPI on Millions of Cores”. The conclusion was that it’s possible to move forward but some non-scalable elements need to be removed or avoided in MPI. This was right on topic for this workshop, and indeed, several authors of the paper were speaking!

The organizers invited me to give a keynote to kick off the event. I was talking about large-scale MPI and large-scale graph analysis and how this could be done in MPI. [Slides]

The very nice organizers sent me some pictures that I want to share here:

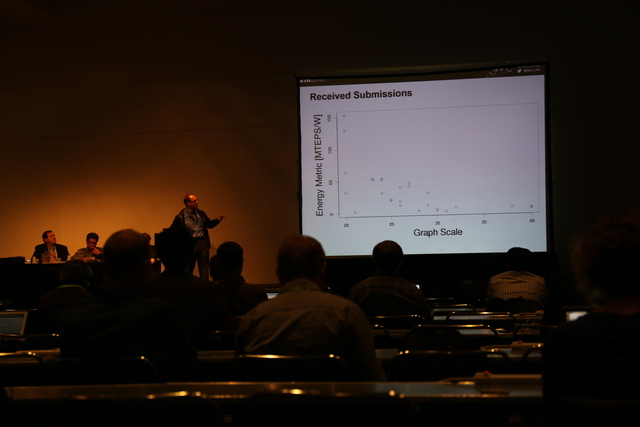

My keynote on large-scale MPI and graph algorithms.

The gigantic room was well filled (I’d guess more than 50 people).

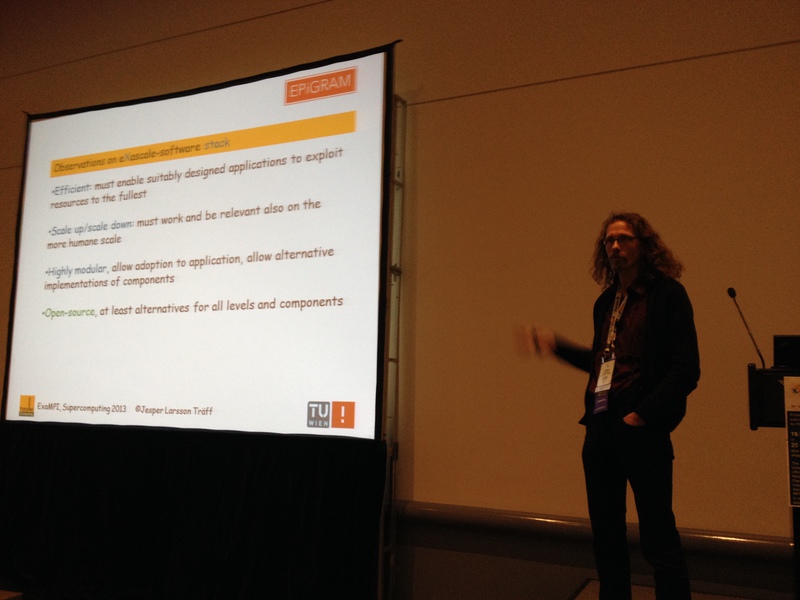

Jesper talking about the EPIGRAM project to address MPI needs for the future large scales.

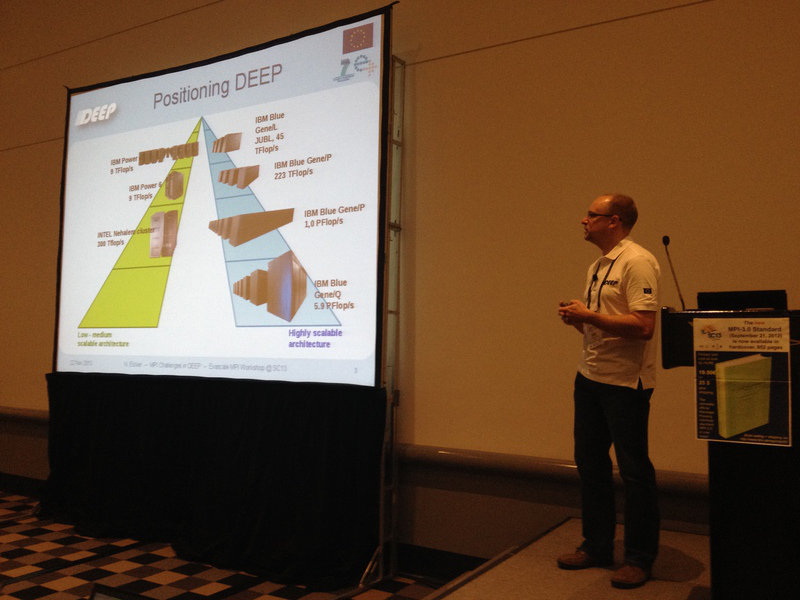

The DEEP strategy of Julich using inter-communicators (the first users I know of).

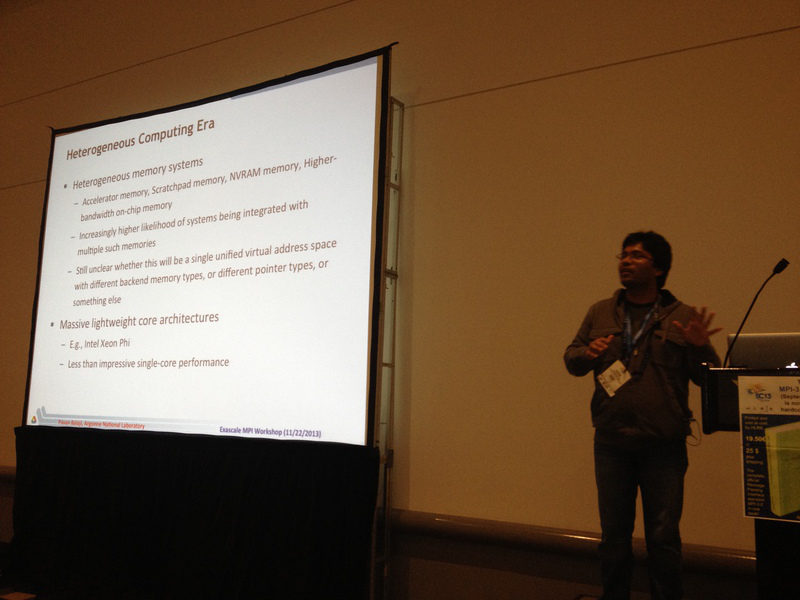

Pavan on our heterogeneous future, very nice insights.

All in all, a great workshop with a very good atmosphere. I received many good questions and had very good discussions afterwards.

Kudos to the organizers!

Jan 26, 2014

This year, I attended my first HiPEAC conference. We had a paper in the main track. It was in Vienna and thus really easy to reach (1 hour by plane). I actually thought about commuting from Zurich every day.

Bogdan presented the paper and did a very good job! I received several positive comments afterwards. Kudos Bogdan!

Dec 2, 2013

Supercomputing is the premier conference in high performance and parallel computing. With more than 10,000 attendees, it’s also the largest and highest-impact conference in the field. Literally everybody is there. Torsten summarized the role of the conference in a blog post over at CACM.

SPCL had a great year at Supercomputing 2013 (SC13)! We’ve been involved in multiple elements of the technical program:

Here are some impressions:

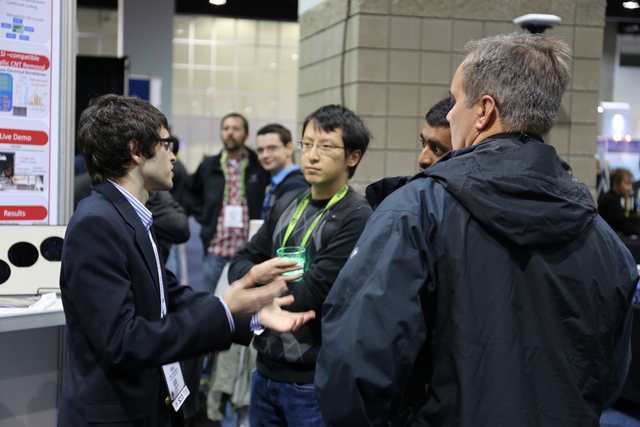

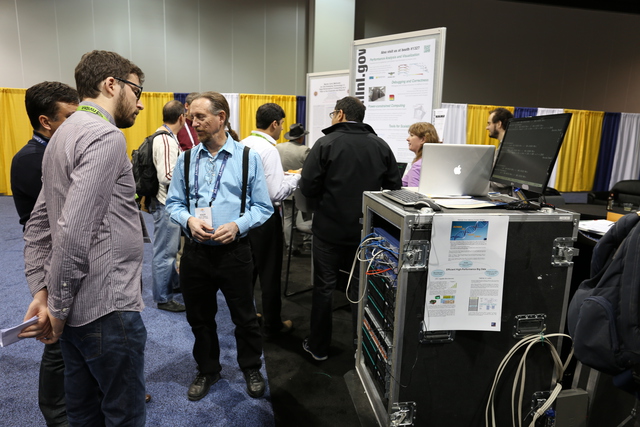

Impressions from the Emerging Technologies Booth

Emerging Technology Booth Talks were generally well attended!

Maciej and his session chair (Rajeev Thakur) are preparing for the presentation of our best paper candidate.

The large room was packed (this shows only the right half, left was as full).

Robert and Maciej fielding questions.

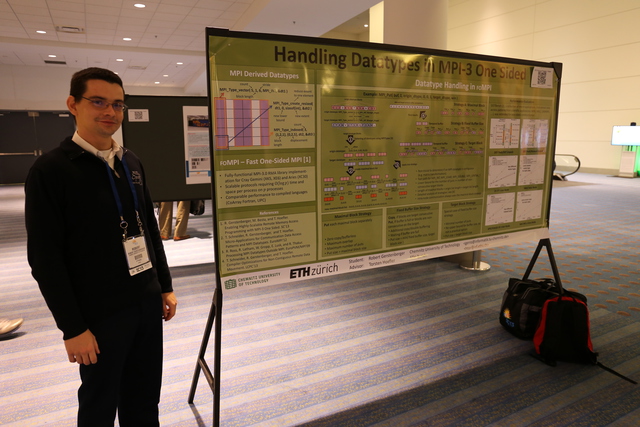

Robert presenting his poster … unfortunately, Maciej didn’t take a picture of Aditya and his poster which was upstairs (and looked at least as good :-)).

Torsten releasing the 2nd Green Graph 500 list with surprises in the top ranks!

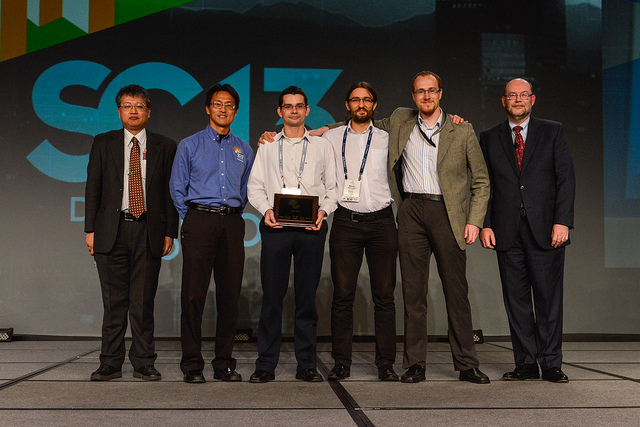

The SC13 Best Paper team and presentation!

Torsten receives his “Young Achievers in Scalable Computing” Award.

Robert received the ACM SRC Bronze medal.

All in all – a great success! Congrats and thanks to everyone who contributed!

Some more nice pictures can be found here.

Nov 17, 2013

A new element in this year’s Supercomputing SC13 conference, Emerging Technologies, is emerging at SC13 right now. The booth of impressive size (see below) features 17 diverse high-impact projects that will change the future of Supercomputing!

Emerging Technologies (ET) is part of the technical program and all proposals have been reviewed in an academically rigorous process. However, as opposed to the standard technical program, ET will be located on the main show floor (booth #3547). This makes it possible to demonstrate technologies and innovations that would otherwise not reach the show floor.

The standing exhibit is complemented by a series of short talks about the technologies. Those talks will be during the afternoons on Tuesday and Wednesday in the neighboring “HPC Impact Showcase” theater (booth #3947).

Check out http://sc13.supercomputing.org/content/emerging-technologies for the booth talks program!

Bob Lucas and I have been organizing the technical exhibit this year and Satoshi Matsuoka will run it for SC14 :-).

So make sure to swing by if you’re at SC13. It’ll definitely be a great experience!

Nov 10, 2013

Today I typed the last command on my long-running server (serving www.unixer.de since 2006 until yesterday):

benten ~ $ uptime

01:31:47 up 676 days, 16:20, 5 users, load average: 2.08, 1.42, 1.39

benten ~ $ dd if=/dev/zero of=/dev/hda &

benten ~ $ dd if=/dev/zero of=/dev/hdb &

benten ~ $ dd if=/dev/zero of=/dev/hdc &

This machine was an old decommissioned cluster node (well, a result of combining two half-working nodes) and served me very well since 2006 (seven years!). Today, it was shut off.

It’s nearly historic (single-core!):

benten ~ $ cat /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 15

model : 1

model name : Intel(R) Pentium(R) 4 CPU 1.50GHz

stepping : 2

cpu MHz : 1495.230

cache size : 256 KB

fdiv_bug : no

hlt_bug : no

f00f_bug : no

coma_bug : no

fpu : yes

fpu_exception : yes

cpuid level : 2

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm up pebs bts

bogomips : 2995.24

clflush size : 64

power management:

benten ~ $ free

total used free shared buffers cached

Mem: 775932 766372 9560 0 85720 273792

-/+ buffers/cache: 406860 369072

Swap: 975200 57904 917296

benten ~ $ fdisk -l

Disk /dev/hda: 20.0 GB, 20020396032 bytes

Disk /dev/hdb: 500.1 GB, 500107862016 bytes

Disk /dev/hdc: 80.0 GB, 80026361856 bytes

Disk /dev/hdd: 500.1 GB, 500107862016 bytes

benten ~ $ cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 hdb1[0] hdd1[2](F)

488383936 blocks [2/1] [U_]

Nov 3, 2013

Pavan Balaji, Jim Dinan, Rajeev Thakur and I are giving our Advanced MPI Programming tutorial at Supercomputing 2013 on Sunday November 17th.

Are you wondering about the new MPI-3 standard? How it affects you as a scientific or HPC programmer and what nice new features you can use to make your life easier and your application faster? Then you should not miss our tutorial.

Our abstract summarizes the main topics:

The vast majority of production parallel scientific applications today use MPI and run successfully on the largest systems in the world. For example, several MPI applications are running at full scale on the Sequoia system (on ~1.6 million cores) and achieving 12 to 14 petaflops/s of sustained performance. At the same time, the MPI standard itself is evolving (MPI-3 was released late last year) to address the needs and challenges of future extreme-scale platforms as well as applications. This tutorial will cover several advanced features of MPI, including new MPI-3 features, that can help users program modern systems effectively. Using code examples based on scenarios found in real applications, we will cover several topics including efficient ways of doing 2D and 3D stencil computation, derived datatypes, one-sided communication, hybrid (MPI + shared memory) programming, topologies and topology mapping, and neighborhood and nonblocking collectives. Attendees will leave the tutorial with an understanding of how to use these advanced features of MPI and guidelines on how they might perform on different platforms and architectures.

This tutorial is about advanced use of MPI. It will cover several advanced features that are part of MPI-1 and MPI-2 (derived datatypes, one-sided communication, thread support, topologies and topology mapping) as well as new features that were recently added to MPI as part of MPI-3 (substantial additions to the one-sided communication interface, neighborhood collectives, nonblocking collectives, support for shared-memory programming).

Implementations of MPI-2 are widely available both from vendors and open-source projects. In addition, the latest release of the MPICH implementation of MPI supports all of MPI-3. Vendor implementations derived from MPICH will soon support these new features. As a result, users will be able to use in practice what they learn in this tutorial.

The tutorial will be example driven, reflecting scenarios found in real applications. We will begin with a 2D stencil computation with a 1D decomposition to illustrate simple Isend/Irecv based communication. We will then use a 2D decomposition to illustrate the need for MPI derived datatypes. We will introduce a simple performance model to demonstrate what performance can be expected and compare it with actual performance measured on real systems. This model will be used to discuss, evaluate, and motivate the rest of the tutorial.
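To give a feeling for the first example, here is a minimal sketch of a 2D stencil with a 1D (row-block) decomposition. It is plain Python and only simulates the ranks as a list of blocks, so there is no MPI and no Isend/Irecv in it, and it is not the tutorial's code. The function names, the 5-point Jacobi update, and the test grid are my own assumptions for illustration. The point is the halo exchange: each simulated rank needs one boundary row from each neighbor before it can update its own rows.

```python
def jacobi_serial(grid):
    """One 5-point Jacobi sweep; the outermost rows and columns stay fixed."""
    n, m = len(grid), len(grid[0])
    new = [row[:] for row in grid]
    for i in range(1, n - 1):
        for j in range(1, m - 1):
            new[i][j] = 0.25 * (grid[i - 1][j] + grid[i + 1][j]
                                + grid[i][j - 1] + grid[i][j + 1])
    return new


def jacobi_decomposed(grid, nranks):
    """Same sweep, computed block by block (assumes len(grid) >= nranks)."""
    n = len(grid)
    # 1D decomposition: contiguous row blocks, one per simulated rank.
    bounds = [(r * n // nranks, (r + 1) * n // nranks) for r in range(nranks)]
    blocks = [[row[:] for row in grid[lo:hi]] for lo, hi in bounds]

    # Halo exchange: copy the neighbor's boundary row (this is the step that
    # would be a pair of Isend/Irecv calls per neighbor in an MPI program).
    halos = []
    for r in range(nranks):
        up = blocks[r - 1][-1][:] if r > 0 else None
        down = blocks[r + 1][0][:] if r < nranks - 1 else None
        halos.append((up, down))

    # Local update: pad each block with its halo rows, sweep, drop the halos.
    result = []
    for block, (up, down) in zip(blocks, halos):
        padded = ([up] if up is not None else []) + block \
                 + ([down] if down is not None else [])
        updated = jacobi_serial(padded)
        start = 1 if up is not None else 0
        result.extend(updated[start:start + len(block)])
    return result


# Illustrative 8x6 grid split over 3 simulated ranks.
grid = [[float((i * 7 + j * 3) % 11) for j in range(6)] for i in range(8)]
print(jacobi_decomposed(grid, 3) == jacobi_serial(grid))  # True
```

With a 2D decomposition the left and right halos become columns, which are strided in a row-major array. That is where derived datatypes come in.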

We will use the same 2D stencil example to illustrate various ways of doing one-sided communication in MPI and discuss the pros and cons of the different approaches as well as regular point-to-point communication. We will then discuss a 3D stencil without getting into complicated code details.

We will use examples of distributed linked lists and distributed locks to illustrate some of the new advanced one-sided communication features, such as the atomic read-modify-write operations.

We will discuss the support for threads and hybrid programming in MPI and provide two hybrid versions of the stencil example: MPI+OpenMP and MPI+MPI. The latter uses the new features in MPI-3 for shared-memory programming. We will also discuss performance and correctness guidelines for hybrid programming.

We will introduce process topologies, topology mapping, and the new “neighborhood” collective functions added in MPI-3. These collectives are particularly intended to support stencil computations in a scalable manner, both in terms of memory consumption and performance.
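As a rough illustration of what a Cartesian process topology provides, the following Python sketch computes the neighbor ranks of a process in an n-dimensional grid. It does not call MPI. The helpers `cart_coords`, `cart_rank`, and `cart_neighbors` are hypothetical names of mine, and `None` stands in for "no neighbor" at a non-periodic edge. I assume row-major rank ordering and list the neighbors per dimension in negative-then-positive order, which is how I understand MPI's Cartesian topologies and neighborhood collectives to arrange them.

```python
def cart_coords(rank, dims):
    """Row-major rank -> coordinates (the last dimension varies fastest)."""
    coords = []
    for extent in reversed(dims):
        coords.append(rank % extent)
        rank //= extent
    return tuple(reversed(coords))


def cart_rank(coords, dims):
    """Coordinates -> row-major rank (inverse of cart_coords)."""
    rank = 0
    for c, extent in zip(coords, dims):
        rank = rank * extent + c
    return rank


def cart_neighbors(rank, dims, periodic):
    """Per dimension, return (negative neighbor, positive neighbor).

    None marks a missing neighbor at a non-periodic boundary.
    """
    coords = cart_coords(rank, dims)
    result = []
    for d, extent in enumerate(dims):
        pair = []
        for step in (-1, +1):
            c = coords[d] + step
            if periodic[d]:
                c %= extent  # wrap around in periodic dimensions
            if 0 <= c < extent:
                shifted = coords[:d] + (c,) + coords[d + 1:]
                pair.append(cart_rank(shifted, dims))
            else:
                pair.append(None)
        result.append(tuple(pair))
    return result


# A 3x4 process grid: rank 5 sits at coordinates (1, 1).
print(cart_neighbors(5, (3, 4), (False, False)))  # [(1, 9), (4, 6)]
print(cart_neighbors(0, (3, 4), (False, False)))  # [(None, 4), (None, 1)]
print(cart_neighbors(0, (3, 4), (True, True)))    # [(8, 4), (3, 1)]
```

Each process only needs to know these 2 × ndims neighbors, which is why a neighborhood collective can get by with memory proportional to the number of neighbors and not to the total number of processes.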

We will conclude with a discussion of other features in MPI-3 not explicitly covered in this tutorial (interface for tools, Fortran 2008 bindings, etc.) as well as a summary of recent activities of the MPI Forum beyond MPI-3.

Our planned agenda for the day is

We’re looking forward to many interesting discussions!

Sep 18, 2013

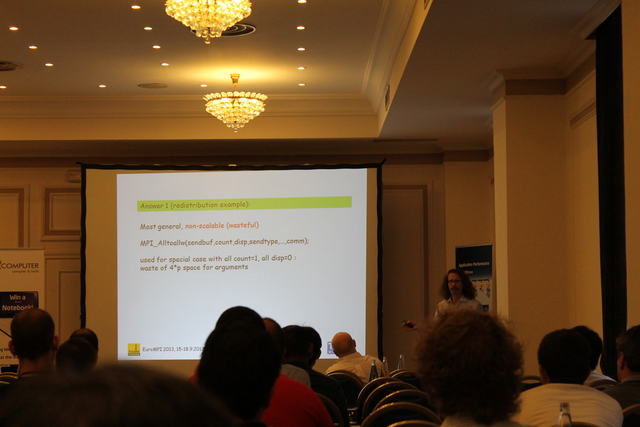

EuroMPI is a very nice conference for the specialized sub-field MPI, namely the Message Passing Interface. I’m a long-term attendee since I’m working much on MPI and also standardization. We had a little more than 100 attendees this year in Madrid and the organization was just outstanding!

We were listening to 25 paper talks and five invited talks around MPI. For example Jesper Traeff, who discussed how to generalize datatypes towards collective operations:

Or Rajeev Thakur, who explained how we get to Exascale and that MPI is essentially ready:

Besides the many great talks, we also had some fun, like the city walking tour organized by the conference

the evening reception, a very nice networking event

or more networking in the Retiro park

followed by the traditional dinner.

On the last day, SPCL’s Timo Schneider presented our award-winning paper on runtime compilation for MPI datatypes

with a provocative start (there were many vendors in the room 🙂)

but an agreeing end.

The award ceremony followed right after the talk.

The conference was later closed with the announcement that next year’s EuroMPI will move to Japan (for the first time outside of Europe).

After all, a very nice conference! Kudos to the organizers.

The one weird thing about Madrid though … I got hit in the face by a random woman in the subway on my way back. Looks like she claimed I had stolen her seat (not sure why/how that happened and many other seats were empty) but she didn’t speak English and kept swearing at me. Weird people! 🙂

Jul 28, 2013

Since the lab moved to Switzerland, we decided to do a hiking trip in the pre-alps. It’s incredibly beautiful and efficient (only 30 mins by car :-)). We decided to climb Mount Rigi with Timo, Maciej, Tobias, and Natalia. Maciej, our local mountaineer, heroically volunteered to carry all food up :-). Unfortunately, we picked a very hot day (about 35 Celsius). It was of course painful but a lot of fun at the same time :-). Rigi is amazing and Switzerland is absolutely beautiful!

Here are some impressions of our first lab excursion.

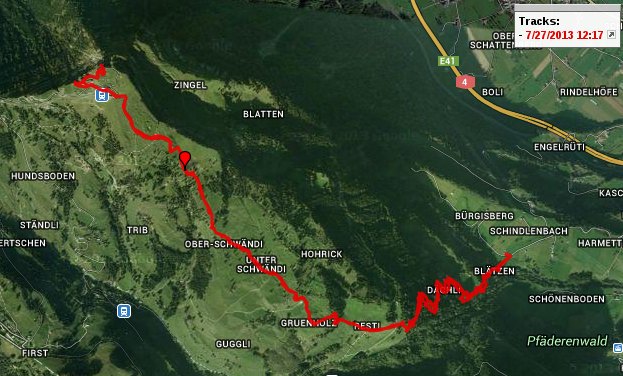

This is our complete trip from top to bottom. More than 1km height difference on about 13 kms. Time to go up ~3:30hrs, time to go down 3:45hrs. Here are some more detailed stats pdf

Before we start! We still look fine 🙂

The first sign, btw., Swiss signage is generally horrible. Rigi is ok, we also had multiple cell phones and even multiple Internet connections :-).

Mac carrying all the stuff, still smiling :-).

First nice view at a lame altitude.

Getting better ….

First hut, still lame altitude. People are having beers here, so can’t be too hard!

Tracking …

And the path vanished somewhat :-/.

Better views.

Strange upwards path (rather steep).

Yes, Mac is alive.

Even better views, we gained some altitude.

Find Mac

Find Mac (easier)

Resti, 1198 meters.

Nice view (and find Mac)

Lame people take the train …

More views.

First rest – nice shade!

Timo doing well (with his new hat)

Water 🙂

Maybe a bit too much.

The whole path was full of barbed wire … it’ll entangle you and rescue you if you fall down :-).

Yes, even at tight passages … a bit weird.

Discussing animals :-).

Some locals.

Birds coming rather close. I didn’t have the energy to get the good lens out of the bag, sorry!

More views. The right one could be our next target!

The top … 3hrs upstairs.

And then the lame surprise … completely commercialized, a train station and tons of overweight tourists. Btw., we saw *NOBODY* walking up even though we moved slowly. Some people were coming down but they looked way too fresh (they must have taken the train up).

The train.

Very nice unobstructed views towards Germany.

Lake Zug (I think).

The alps (more hiking!).

Timo found a friend.

Love at first sight (look at the ears).

Our new lab member, donk.

Yes, we made it!

Military drill to pass electrified fences :-).

Views ….

More locals watching us.

Our hickory fridge. So it was 35 Celsius and we walked for four hours or more. How do you prevent your barbecue meat from going bad? Well, Mac had the rescuing idea: deep-freeze everything and pack six bottles of frozen water into a thermo-bag. It lasted very well, all meat was still slightly frozen. Even better, we had nicely chilled water for the way back :-).

Professional barbecue equipment :).

Food.

More food.

Cervelas on the fire.

And Hamburgers on the grill.

Goooooood!

Back at the fun :-).

Good teamwork.

Beautiful views all over.

.. and everything prepared to survive.

On the way back.

More views.

Don’t fall :-).

Find Tobias and Timo.

Making contact with the locals.

Ohoh …

Is the cow laughing?

Ok, it’s not hostile.

Nature.

Getting late … but still ok.

The target!

And done … it was a rather nice trip. We may do it again :-). Thanks all for making it so much fun!