The Intl. Supercomputing (SC) conference is clearly the main event in HPC. Its program is broad and more than 10k people attend annually. SPCL is mainly focused on the technical program, which makes SC the top-tier conference in HPC. It is the main conference of a major ACM SIG (SIGHPC).

This year, SPCL members co-authored three technical papers in the very competitive program with several thousand attendees! One was even nominated for the best student paper award and, to say it up front, we got it! Congrats Maciej! All talks were very well attended (more than 100 people in the room).

All of these talks were presented by collaborators, so I was hoping to be off the hook. Well, not quite, because I gave seven (7!) invited talks at various events and participated in teaching a full-day tutorial on advanced MPI. The highlight was a keynote at the LLVM workshop. I was also running around all the time because I co-organized the overall workshop program (with several thousand attendees) at SC14.

So let me share my experience of all these exciting events in chronological order!

1) Sunday: IA3 Workshop on Irregular Applications: Architectures & Algorithms

This workshop was very nice. It was kicked off by top-class keynotes from Onur Mutlu (CMU) and Keshav Pingali (UT), followed by great paper talks and a panel in the afternoon. I served on the panel with some top-class people and it was a lot of fun!

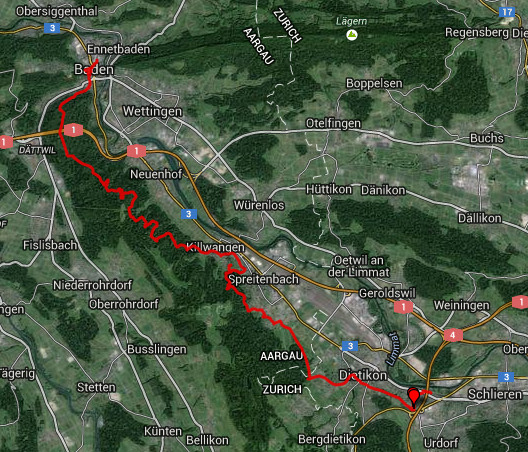

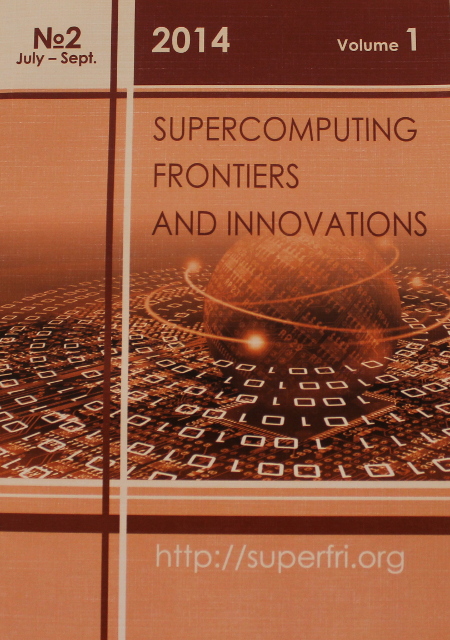

Giving my panel presentation on accelerators for graph computing.

Arguing during the panel discussion (Hadoop right now) with (left to right): Keshav Pingali (UT Austin), John Shalf (Berkeley), me (ETH), Clayton Chandler (DOD), Benoit Dupont de Dinechin (Kalray), Onur Mutlu (CMU), Maya Gokhale (LLNL). A rather argumentative group :-).

My slides can be found here.

2) Monday – LLVM Workshop

It was long overdue to discuss the use of LLVM in the context of HPC. So thanks to Hal Finkel and Jeff Hammond for organizing this fantastic workshop! I kicked it off with some considerations about runtime recompilation and how to improve codes.

The volunteers counted around 80 attendees in the room! Not too bad for a workshop. My slides on “A case for runtime recompilation in HPC” are here.

3) Monday – Advanced MPI Tutorial

Our tutorial attendee numbers keep growing! More than 67 people registered but it felt like more were showing up for the tutorial. We also released the new MPI books, especially the “Using Advanced MPI” book which shortly after became the top new release on Amazon in the parallel processing category.

4) Tuesday – Graph 500 BoF

There, I released the fourth Green Graph 500 list. Not much new happened on the list (same as for the Top500 and Graph500) but the BoF was still fun! Peter Kogge presented some interesting views on the data of the list. My slides can be found here.

5) Tuesday – LLVM BoF

Concurrently with the Graph 500 BoF was the LLVM BoF, so I had to speak at both at the same time. Well, that didn’t go too well (I’m still only one person; apologies to Jim). I only made 20% of this BoF but it was great! Again, very good turnout; LLVM is certainly becoming more important every year. My slides are here.

6) Tuesday – Simulation BoF

There are many simulators in HPC! Often for different purposes but also sometimes for similar ones. We discussed how to collaborate and focus our efforts better. I represented LogGOPSim, SPCL’s discrete event simulator for parallel applications.

My talk summarized its features and achievements; the slides can be found here.

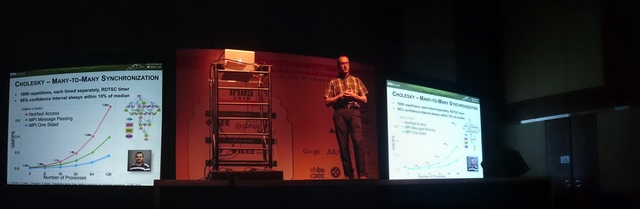

7) Tuesday – Paper Talk “Slim Fly: A Cost Effective Low-Diameter Network Topology”

Our paper was up for Best Student Paper and Maciej did a great job presenting it. But no need to explain, go and read it here!

Maciej presenting the paper! Well done.

8) Wednesday – PADAL BoF – Programming Abstractions for Data Locality

Programming has to become more data-centric as architectures evolve. This BoF followed an earlier workshop in Lugano on the same topic. It was great — no slides this time, just an open discussion! I hope I didn’t upset David Padua :-).

Didem Unat moderated and the panelists were Paul Kelly (Imperial), Brad Chamberlain (Cray), Naoya Maruyama (TiTech), David Padua (UIUC), me (ETH), and Michael Garland (NVIDIA). It was a truly lively BoF :-).

But hey, I just got it in writing from the Swiss that I’m not qualified to talk about this topic — bummer!

The room was packed and the participation was great. We didn’t get to the third question! I loved the education question: we need to change the way we teach parallel computing.

9) Wednesday – Paper Talk “Understanding the Effects of Communication and Coordination on Checkpointing at Scale”

Kurt Ferreira, a collaborator from Sandia, spoke about unexpected overheads of uncoordinated checkpointing, analyzed using LogGOPSim (it’s a cool name!!). Go read the paper if you want to know more!

Kurt speaking.

10) Thursday – Paper Talk “Fail-in-Place Network Design: Interaction between Topology, Routing Algorithm and Failures”

Presented by Jens Domke, a collaborator from Tokyo Tech (now at TU Dresden). A nice analysis of what happens to a network when links or routers fail. Read about it here.

Jens speaking.

11) Thursday – Award Ceremony

Yes, somewhat unexpectedly, we got the best student paper award. It is the second major technical award in a row for SPCL (after last year’s best paper).

Happy :-).

Coverage by Michele @ HPC-CH and Rich @ insideHPC.