The Open Fabrics Alliance just released a new version of the Open Subnet Manager (OpenSM) that includes our Deadlock-Free SSSP Routing for InfiniBand (DFSSSP) routing algorithm [2]!

This new version fixes several minor bugs, adds support for base/enhanced switch port 0, and further improves routing performance, but it lacks support for multicast routing (see ‘Update’ below).

DFSSSP is a new routing algorithm that can be used to route InfiniBand networks with OpenSM 3.3.16 [1] and later. It generally performs better than the default Min Hop algorithm and avoids deadlocks by routing through different virtual lanes (VLs). Because of the problems mentioned above, we do not recommend using the DFSSSP routing algorithm included in the OFED 3.2 and 3.5 releases.

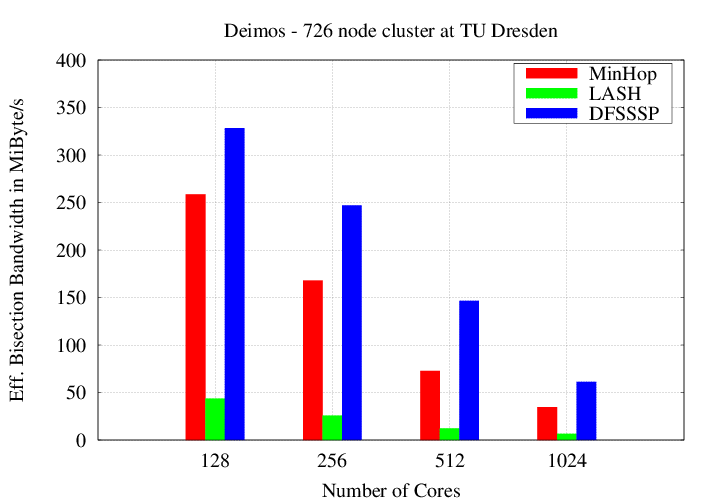

DFSSSP can deliver up to 50% higher routing performance for dense (bisection-limited) communication patterns such as all-to-all, and thus directly accelerates dense communication applications such as the Graph500 benchmark [4]. The following figure shows a direct comparison with other routing algorithms on a 726-node cluster running MPI with one process per node (in the 1024-process case, some nodes run two processes):

This comparison uses Netgauge’s effective bisection bandwidth benchmark, an approximation of the real bisection bandwidth of a network.

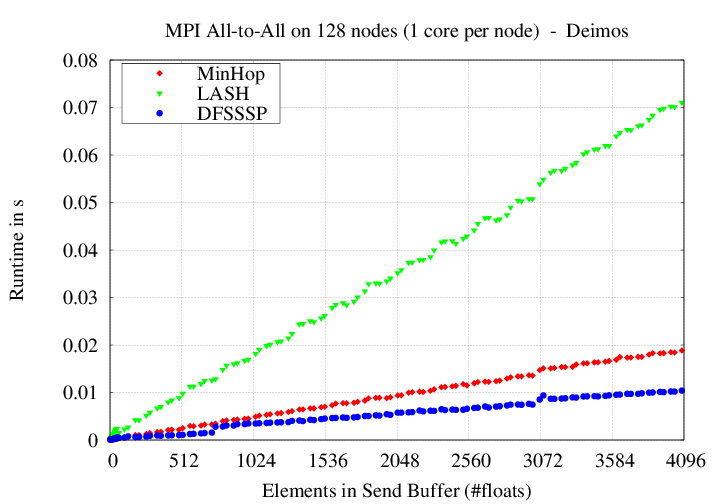

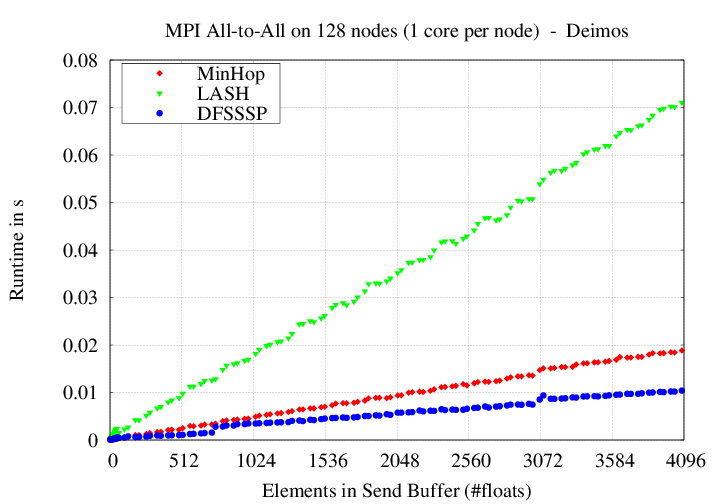

MPI_Alltoall performance improves similarly over Min Hop and LASH routing, as can be observed in the following figure (using 128 nodes):

To use the new DFSSSP algorithm with OpenSM version 3.3.16, start OpenSM with ‘-R dfsssp’ on the command line or set ‘routing_engine dfsssp’ in the configuration file. Besides configuring the routing algorithm, you have to enable QoS with a uniform distribution (see [A1]) and enable service level query support in your MPI environment (see [A2] for OpenMPI).

You should compare the bandwidth yourself. Effective bisection bandwidth and all-to-all performance can be measured with Netgauge; however, real application measurements are always best!

Now you may be wondering why DFSSSP is faster than Min Hop, since Min Hop already minimizes the number of hops between all endpoints. The trick is that DFSSSP optimizes the *global bandwidth* in addition to the distance between endpoints. This is achieved with a simple greedy algorithm described in detail in [3]. Deadlock-freedom is then added by using different virtual lanes for the communication as described in [2]. By the way, Min Hop does not guarantee deadlock freedom! If you want to know more, read [2] and [3] or come to the HPC Advisory Council Switzerland Conference 2013 in March, where I’ll give a talk about the principles behind DFSSSP and how to use it in practice.
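To make the greedy idea more concrete, here is a minimal Python sketch. It is not the OpenSM implementation and simplifies what [3] describes: it runs one shortest-path search per destination and then makes every link used by the resulting routes more expensive, so later destinations tend to pick less loaded links. The function name `balanced_sssp_routes`, the initial weight of 1, the increment of 1 per route, and the toy topology are all assumptions made for illustration. The sketch also makes no attempt to keep paths minimal in hop count and does not cover the virtual lane assignment from [2].

```python
import heapq
from collections import defaultdict


def balanced_sssp_routes(links, endpoints):
    """Route all endpoint pairs, penalizing links that already carry routes.

    links: iterable of (u, v) undirected cables between node names.
    endpoints: list of node names that send and receive traffic.
    Returns ({(src, dst): [node, ...]}, {(u, v): number of routes on u -> v}).
    """
    adj = defaultdict(list)
    weight = {}
    for u, v in links:
        adj[u].append(v)
        adj[v].append(u)
        weight[(u, v)] = weight[(v, u)] = 1.0  # assumed initial weight

    load = defaultdict(int)
    routes = {}
    for dst in endpoints:
        # Dijkstra rooted at dst: next_hop[n] is n's neighbor toward dst.
        dist = {dst: 0.0}
        next_hop = {}
        heap = [(0.0, dst)]
        while heap:
            d, n = heapq.heappop(heap)
            if d > dist[n]:
                continue  # stale heap entry
            for m in adj[n]:
                nd = d + weight[(m, n)]  # traffic flows m -> n toward dst
                if nd < dist.get(m, float("inf")):
                    dist[m] = nd
                    next_hop[m] = n
                    heapq.heappush(heap, (nd, m))

        # Record the routes to dst and raise the weight of every link used.
        for src in endpoints:
            if src == dst or src not in next_hop:
                continue
            path = [src]
            while path[-1] != dst:
                path.append(next_hop[path[-1]])
            routes[(src, dst)] = path
            for a, b in zip(path, path[1:]):
                load[(a, b)] += 1
                weight[(a, b)] += 1.0  # assumed penalty per route
    return routes, dict(load)


# Toy fabric: two leaf switches (s1, s2) joined by two spine switches (m1, m2).
links = [("a", "s1"), ("b", "s1"), ("c", "s2"), ("d", "s2"),
         ("s1", "m1"), ("s1", "m2"), ("m1", "s2"), ("m2", "s2")]
routes, load = balanced_sssp_routes(links, ["a", "b", "c", "d"])
print(load[("s1", "m1")], load[("s1", "m2")])
```

On this toy fabric the routes leaving each leaf switch end up split evenly across the two spines, whereas the same search with constant weights would send all of them through whichever spine wins the tie-break.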

DFSSSP is developed in collaboration between the main developer, Jens Domke at the Tokyo Institute of Technology, and Torsten Hoefler of the Scalable Parallel Computing Lab at ETH Zurich.

[1]: opensm-3.3.16.patched.tar.gz

[2]: J. Domke, T. Hoefler and W. Nagel: Deadlock-Free Oblivious Routing for Arbitrary Topologies

[3]: T. Hoefler, T. Schneider and A. Lumsdaine: Optimized Routing for Large-Scale InfiniBand Networks

[4]: Graph 500: www.graph500.org

[5]: openmpi-1.6.4.patched.tar.gz

[A1] Possible QoS configuration for OpenSM + DFSSSP with 8 VLs:

qos TRUE

qos_max_vls 8

qos_high_limit 4

qos_vlarb_high 0:64,1:64,2:64,3:64,4:64,5:64,6:64,7:64

qos_vlarb_low 0:4,1:4,2:4,3:4,4:4,5:4,6:4,7:4

qos_sl2vl 0,1,2,3,4,5,6,7,0,1,2,3,4,5,6,7
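If your fabric supports a different number of VLs, the same uniform layout can be generated instead of typed by hand. The following Python sketch reproduces the option lines above for 8 VLs. The helper name `dfsssp_qos_options` and its parameters are hypothetical, and restricting the VL count to values that divide 16 is an assumption of this sketch so that the 16 SLs spread evenly. Check the generated values against your OpenSM documentation and hardware before using them.

```python
def dfsssp_qos_options(num_vls=8, high_weight=64, low_weight=4, high_limit=4):
    """Return OpenSM option lines that map the 16 SLs uniformly onto the VLs."""
    if num_vls not in (1, 2, 4, 8):
        # Assumption of this sketch: only counts that divide 16 evenly.
        raise ValueError("num_vls must be 1, 2, 4 or 8")
    vls = range(num_vls)
    return [
        "qos TRUE",
        f"qos_max_vls {num_vls}",
        f"qos_high_limit {high_limit}",
        # Same arbitration weight for every VL in both tables.
        "qos_vlarb_high " + ",".join(f"{vl}:{high_weight}" for vl in vls),
        "qos_vlarb_low " + ",".join(f"{vl}:{low_weight}" for vl in vls),
        # Round-robin mapping of SL 0..15 onto the available VLs.
        "qos_sl2vl " + ",".join(str(sl % num_vls) for sl in range(16)),
    ]


print("\n".join(dfsssp_qos_options(8)))
```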

[A2] Enable SL queries for the path setup within OpenMPI:

a) configure OpenMPI with "--with-openib --enable-openib-dynamic-sl"

b) run your application with "--mca btl_openib_ib_path_record_service_level 1"

PS: We experienced some trouble with old HCA firmware that did not support sending userspace MAD requests on VLs other than 0.

You can test the following command (as root) on some nodes and see if you get a response from the subnet manager:

saquery -p --src-to-dst LID1:LID2

If the command stalls or returns with an error, you might have to update the firmware.
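To run this check on several node pairs without waiting on a hung query, the command can be wrapped with a timeout. The following Python sketch does that. The function name `check_path_record_query`, the 10 second default timeout, and the three status strings are assumptions for illustration; only the saquery invocation itself comes from the command above.

```python
import subprocess


def check_path_record_query(src_lid, dst_lid, timeout_s=10.0, command=("saquery",)):
    """Run `saquery -p --src-to-dst SRC:DST` and classify the outcome.

    Returns "ok" on exit status 0, "error" on any other exit status,
    and "stalled" if the command does not finish within timeout_s.
    The `command` parameter exists only so the binary can be swapped out.
    """
    argv = [*command, "-p", "--src-to-dst", f"{src_lid}:{dst_lid}"]
    try:
        result = subprocess.run(
            argv, capture_output=True, text=True, timeout=timeout_s
        )
    except subprocess.TimeoutExpired:
        return "stalled"  # the child process is killed when the timeout hits
    return "ok" if result.returncode == 0 else "error"
```

A call such as `check_path_record_query(LID1, LID2)` with two LIDs from your fabric, run as root, returns "stalled" or "error" in the cases where a firmware update might be needed.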

Update:

The fix for multicast routing has been implemented and tested. Please use our patched version of opensm-3.3.16 (see [1]) instead of the default version from the OFED websites. Besides the multicast patch, this version contains a slightly enhanced implementation of the VL balancing. Future releases by the Open Fabrics Alliance (>= 3.3.17) will ship with both patches.

Besides the multicast problem, we have identified a bug in OpenMPI related to the connection management of the openib BTL. We provide a patched version of OpenMPI as well (see [5]).