Home

Publications

Awards

Research

NB Collectives

MPI Topologies

MPIParMETIS

LibTopoMap

MPI Datatypes

Netgauge

Network Topologies

Ethernet BTL eth

ORCS

DFSSSP

Older Projects

cDAG

LogGOPSim

CoMPIler

Teaching

Miscellaneous

Full CV [pdf]

ew-hoefe

BLOG

bio

Events

Past Events

|

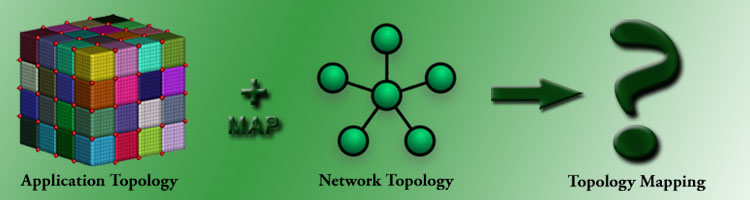

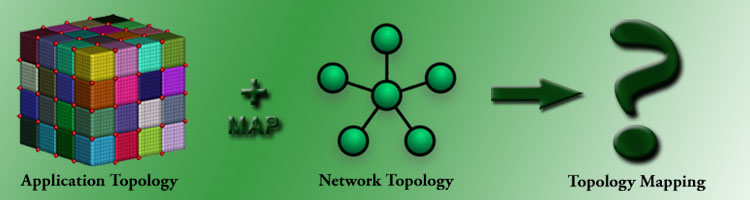

LibTopoMap - A Generic Topology Mapping Library

LibTopoMap is a library that enables generic topology mapping for

arbitrary process topologies to arbitrary network topologies. Currently,

it supports MPI's distributed graph topology [1] but it can be adopted

to provide topology mapping functions for any parallel programming runtime.

The current implementation is serial, i.e., mappings are computed on each

rank of the parallel allocation. Libtopomap uses different starting values

for greedy mapping and different strategies on different processes to

use the available parallelism in the run. The processes then agree on the

single best solution by either a minimal average dilation or minimal

maximum congestion metric. The library supports weighted application

graphs and heterogeneous networks (different bandwidths in different links).

The library supports multicore with k-way partitioning and provides four

mapping algorithms: (1) Greedy, (2) Recursive, (3) RCM, and (4) SCOTCH

static mapping. Simulated annealing can be used to improve the found

solution further. A detailed description and evaluation can be found in [2].

LibTopoMap requires ParMETIS and supports SCOTCH.

|

|

Download LibTopoMap:

|

|

Building LibTopoMap

- unpack tgz

- edit Makefile.inc and set CXX and ParMETIS (and optionally SCOTCH) paths correctly

- METIS may not support linking to C++, thus, a patch adding 'extern "C"' statements may be necessary, for example, for metis-4.0: metis_4.0-extern_c-patch.diff - (1.62 kb)

- make

- build sparse matrix test: cd matvec-test; make

|

|

Running the Sparse Matrix Vector test

This example explains how to run the sparse matrix-vector multiplication

mapping on a set of processes with a 3x3x3 torus topology. Topology map

and process-to-node mapping ("fake mapping") are delivered with the

package. We describe how to create and evaluate arbitrary network graphs

and multicore below.

- download and unpack a matrix from UFL collection.

e.g., $ wget http://www.cise.ufl.edu/research/sparse/MM/GHS_indef/aug2dc.tar.gz

- start 12 processes with aug2dc.mtx, the topology file ../3x3x3.map, pretending they run on hosts in ../3x3x3.fake.

e.g., $ mpirun -n 12 ./reader 0 ./aug2dc/aug2dc.mtx ../3x3x3.map ../3x3x3.fake

|

|

Using LibTopoMap

LibTopoMap has multiple ways for determining which node a process runs

on. It supports the native interface on BlueGene/P to determine the

Torus topology of the system and the location of each process. For other

architectures, LibTopoMap reads a file that specifies an adjacency list

of the complete physical network topology. Each node is identified by

its hostname in the file and LibTopoMap calls gethostname() to retrieve

the name of the node a process is running on. Thus, hostnames must be

unique in the network.

The next section describes the format of the topology map file by

example:

Format of the Topology Map File (unweighted)

The first example defines a simple ring (direct) network with 3 nodes

called "host0", "host1", and "host2":

num: 3

0 host0

1 host1

2 host2

0 1 2

1 2 0

2 0 1

The second example defines a central switch-based (indirect) network

with 3 nodes called "host0", "host1", and "host2" and one switch.

num: 4

0 host0

1 host1

2 host2

3 switch

0 3

1 3

2 3

3 0 1 2

The format of the topology files is as follows:

- Line 1 specifies number of vertices (switches or endpoints) in the network

- Line 2 to <nvertexes>+1 lists the hostnames of each vertex (the mapper library will identify where each process is located by gethostbyname())

- Line <nvertexes>+1 to 2*<nvertexes>+1 lists the adjacency list of each vertex

The distribution includes a 3x3x3 torus example (3x3x3.map).

Format of the Topology Map File (weighted)

LibTopoMap also supports heterogeneous topologies with different

bandwidths (edge weights) for each edge. The weights are integer values.

If the actual bandwidths are not integer, then the input should scale the

weights until they are relative integer values (e.g., if a bandwidth is

15.5 GB/s, one would scale every bandwidth by a factor of 10 for the graph

input). A weighted graph simply adds "w" after the number of nodes and

specifies the integer weight of each connection in brackets in the adjacency

list. See the simple ring example below:

num: 3w

0 host0

1 host1

2 host2

0 1(1) 2(1)

1 2(1) 0(1)

2 0(1) 1(1)

All links have the same bandwidth in this example (which is thus

equivalent to an unweighted specification).

Possible Extension for Routes

LibTopoMap may handle routes at some point, the envisioned format for

specifying routes (in a separate file) is to list the route from each

source to each destination explicitly.

The format would be a similar textfile with the first line specifying the

number of hosts N and the next N*(N-1) lines specifying the routes between

all ordered host pairs. For example:

num: 3

0 1 1

0 2 1 2

1 0 0

1 2 2

2 0 0

2 3 0 3

The first two integers are the source and destination and the next integers

until a line break are the nodes to traverse from source to destination.

Fake Node Allocations

Sometimes, it is not possible to run the topomapper on the target

system. Thus, the library allows a simulation mode where the user

specifies a mapping of processes to nodes in a so-called "fake file".

The format is simply: "<rank> <hostname>" per line.

The user can also emulate a multi-core allocation (multiple processes

pre node in the physical topology).

Single-core allocation (process 0 to host0 etc.):

0 host0

1 host1

2 host2

Dual-core allocation (process 0+1 to host0 etc.):

0 host0

1 host0

2 host1

3 host1

4 host2

5 host2

The distribution includes a fake file for the 3x3x3 torus topology

(3x3x3.fake).

Environment Variables

The behavior of LibTopoMap can be influenced with several environment

variables. LibTopoMap uses the prefix TPM_.

- TPM_STRATEGY - select the mapping strategy (either none, greedy, recursive, rcm, or scotch)

- TPM_ANNEAL - enable (=yes) or disable simulated annealing to improve solution

- TPM_PARMETIS - enable (=yes) partitioning the multicore allocation before mapping with METIS k-way partitioning (only applicable if all nodes have the same number of processes)

- TPM_FIX_PARMETIS - enable (=yes) balancing the resulting k-way partitioned graph (necessary for RCM or load balancing, is enabled automatically if necessary)

- TPM_FIX_GRAPH - enable (=yes) to make input graph symmetric (necessary for RCM or load balancing, is enabled automatically if necessary)

|

References

| CCPE | [1] Torsten Hoefler, Rolf Rabenseifner, H. Ritzdorf, Bronis R. de Supinski, Rajeev Thakur and Jesper Larsson Träff: | | | The Scalable Process Topology Interface of MPI 2.2 Concurrency and Computation: Practice and Experience. Vol 23, Nr. 4, pages 293-310, John Wiley & Sons, Ltd., ISSN: 1532-0634, Aug. 2010,   |

|